One of the defining moments in Gene Roddenberry’s “Star Trek” series, “The Next Generation,” was the episode in which Data, the sole robotic member of the crew on the Enterprise, was put on trial to determine whether he was sentient.

That would mean he was entitled to all the rights and privileges extended to his human crewmates.

Or was he simply a silicon-based robot, highly intelligent but with no human rights? (The court eventually ruled in Data’s favour.)

With AI poised to take on ever more big data and, more importantly, growing increasingly capable of autonomous learning and problem-solving, it tests the limits of silicon-based intelligence and, increasingly, the limits of what it means to be human.

It has already demonstrated an astounding talent for autonomously operating drones; devising, carrying out and analyzing research projects; composing music in the style of major composers of the past and present; creating entirely novel music; producing paintings that fool the experts; and writing poetry indistinguishable from poems created by humans. And increasingly it’s a problem for educators, as AI creates essays for students.

This talent for self-learning, or what some in the field call “deep learning,” raises questions about the lines between carbon-based intelligence – that’s us – and silicon-based intelligence (AI).

The arguments and counter-arguments on display in the fictional court in “Star Trek” centred on questions about what’s truly uniquely human. Clearly the borders between AI and humans are fraying.

It wasn’t so long ago that many gave little credence to the idea that animals possessed cognitive talents and a sense of altruism akin in nature, if not degree, to our own.

However, recent observations by primatologists such as Frans de Waal make clear that chimpanzees are capable of altruistic behaviour and of providing long-term care for disabled or sick band members.

Those talents aren’t limited to apes – they extend to whales, porpoises, elephants and even crows, to name several other highly intelligent species.

Our reluctance to admit that moral behaviour is shared by other animals is more than mirrored by similar ignorance and prejudice toward members of our own species, including our reluctance to extend the rights and privileges we enjoy to humans of differing colour, culture and beliefs, to the underprivileged and to those with differing sexual orientations. The list goes on.

Of course, it’s a whole other matter when it comes to inanimate AI. Few would argue that robots and AI warrant human rights – yet. Among the reasons for not doing so, even in a future when AI might well rival the comprehensive array of cognitive powers possessed by humans, is the human aversion to accepting computer-based machines – presumably without a soul or consciousness – as equals!

It’s a specious argument, like so many philosophical arguments that lead nowhere. Speaking as a neurophysiologist and neurologist, I would say that when the heart stops, whatever soul might be lurking in me, and whatever awareness I might have of the moment, are surely gone once my brain stops working.

What surely matters is whether AI can master the nuances of symbolic language, elements of social intelligence such as reading human intentions and feelings, and the complexity of human relationships, and whether it can develop a moral sense.

Should AI acquire these traits sometime in the future, then the question of whether the machine is conscious or not is surely irrelevant – AI will have achieved the prime attributes of sentient humans.

So far, Google AI can translate and master many nuances of symbolic language, read human feelings and recognize human faces. On its current trajectory, much of the rest will be within Google AI’s grasp within two or three generations. That is scary, at least to me, if not to you.

There’s another issue here, too. If complex carbon-based life with intelligence equivalent to our own emerges elsewhere in the universe (as is likely to be the case given the billions of planets potentially capable of supporting carbon-based life), will we welcome that intelligence? Will we consider it as sentient?

Or what if life elsewhere in the universe turns out to be not carbon-based but silicon-based? If so, what will we make of it? Will we accept it as an equal?

That’s really the question we should be asking. Maybe AI is a life form evolving in our midst, admittedly computer-based and created by us, but which might evolve to become our equal or even surpass our intelligence and what we consider as sentience in the future.

On a more humorous note, Richard Yonck, author of “Heart of the Machine: Our Future in a World of Artificial Emotional Intelligence” (2017), imagines a moment in the future when a father is confronted with a daughter who is determined to marry an AI robot.

Something to think about, don’t you think?

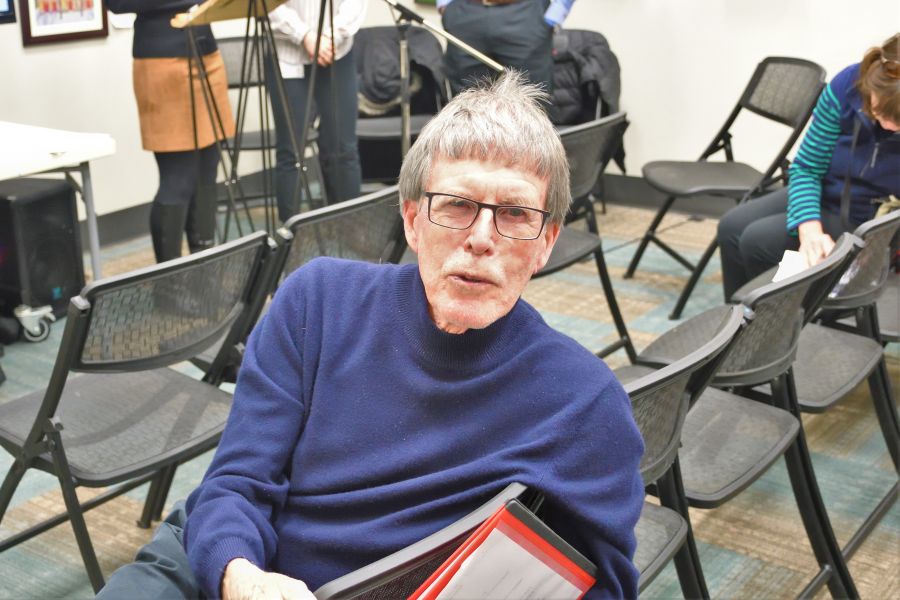

Dr. William Brown is a professor of neurology at McMaster University and co-founder of the InfoHealth series at the Niagara-on-the-Lake Public Library.