Muons are hardly the hot stuff of everyday conversation, unlike the media frenzy stoked by the volatile, to-and-fro outbursts of politicians these days.

But it’s fair to say that long after the current verbal crossfire is over and reduced to a footnote in history, muons will still be there, playing their part in the subatomic universe that underlies atoms, molecules and everything else, a universe that took shape within seconds of the Big Bang.

The Camelot years in physics, from roughly 1900 to the early 1930s, sorted out the major players in the subatomic universe (protons, neutrons and electrons). The next third of the century was spent identifying subatomic forces and a dizzying array of new particles before physicists arrived at a Standard Model of the subatomic universe in the 1960s and 1970s.

The Standard Model turned out to be highly predictive, anticipating particles such as the W and Z bosons and the Higgs boson, which experiments later confirmed.

But however predictive and precise the Standard Model may be in the quantum world, it fails to solve some big puzzles, including the nature of dark matter. That is a glaring omission, given that dark matter is thought to make up roughly a quarter of the universe’s mass and energy and to be about five times more abundant than the ordinary matter we’re familiar with day to day.

That’s where the muon comes in. Muons are similar to electrons but roughly 200 times more massive.

Because of their intrinsic magnetism, muons wobble, or precess, like tiny spinning tops when placed in an external magnetic field. The strength of that wobble is set by a quantity called g, which would equal exactly 2 if the muon were alone in truly empty space.

However, according to quantum theory, empty space isn’t empty but jam-packed with all kinds of “virtual” particles popping in and out of existence, which nudge the value of g slightly away from 2.

Hence, theorists suggest that measurements of g that deviate from the value predicted by the Standard Model, which already accounts for the known virtual particles, might point to as yet unidentified particles. They might also offer a window into how the Standard Model could be changed to explain enigmas such as dark matter and why neutrinos have mass.

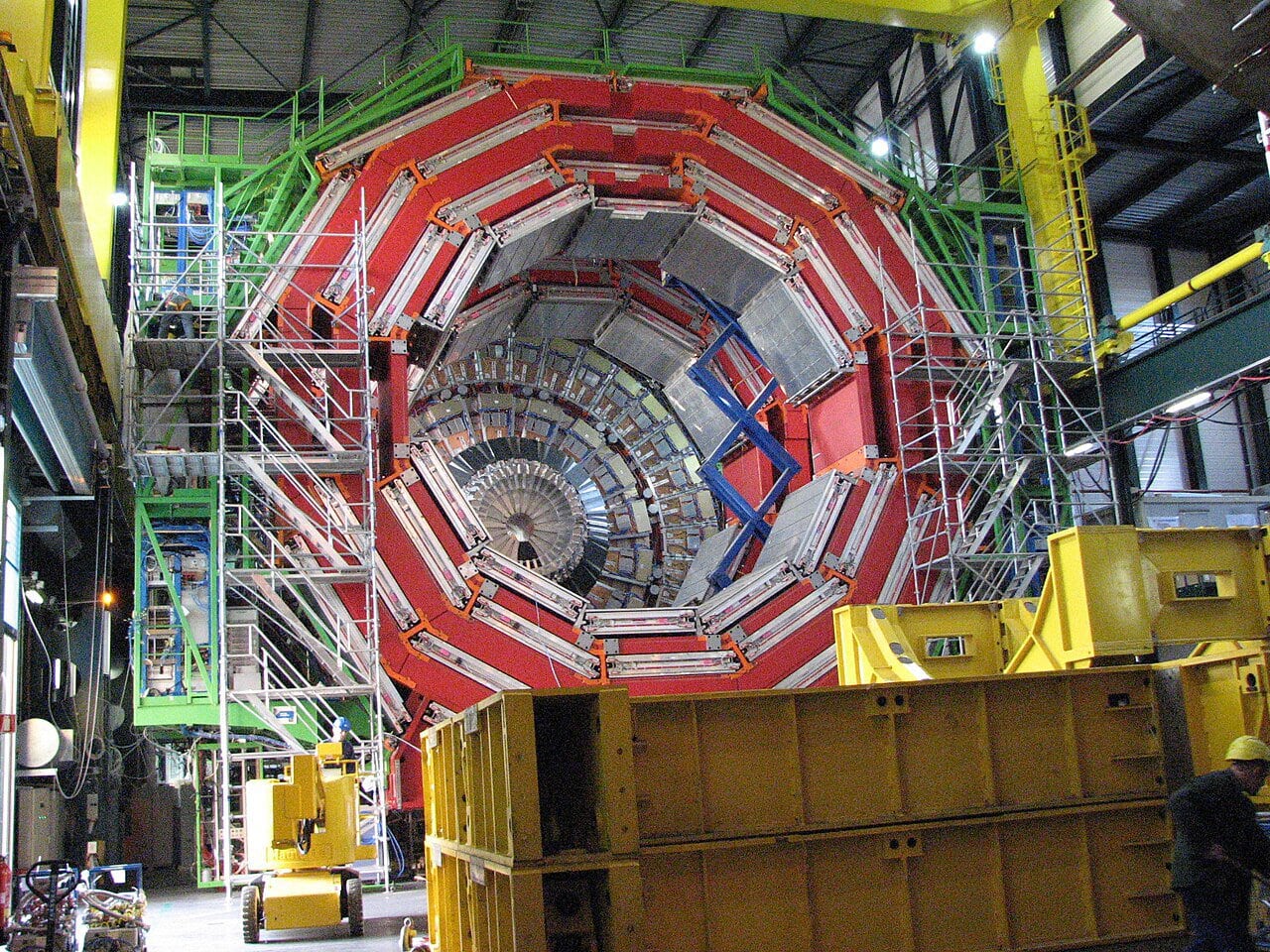

Sorting all this out falls to experimentalists, such as the physicists and engineers at the Fermi National Accelerator Laboratory (Fermilab) in Batavia, Ill., and to theoretical physicists.

So far, however, the two groups don’t agree on the value of g, and there is no clear way forward in sight. Which raises the obvious questions: Why does any of this matter? Why invest more money in inconclusive results now and in the future?

Good questions. In the current climate of funding cuts, this is surely a justifiable cut — or is it?

Basic science, of which this study is a good example, is high risk and often expensive. It is high risk because programs often fail when the technology is not yet capable of generating the answers needed.

This was certainly the case more than a decade ago, when hugely expensive, independent American and European projects were launched to map the brain at high resolution.

Both fell short because mapping every functional type of cell and connection in the brain did not translate into useful information about how the brain actually works. Even the brains of insects such as flies are enormously complex, never mind the human brain.

And it’s fair to say that the low-hanging fruit of cheap, high-value projects has been picked: no more Einsteins working in patent offices without a university affiliation yet managing to come up with profound answers to fundamental questions about the universe.

Research in much of the early and middle 20th century was cheap; many instruments were handmade. Compare that with astronomy today: designing, building, launching and maintaining telescopes, whether in space or on the ground, to say nothing of paying the scientists and engineers involved, is all very expensive.

So far, most such programs, the James Webb Space Telescope among them, have been highly successful and, luckily, continue to live to tell their tales.

The sciences of computer chip design and artificial intelligence have proven to be hugely successful. Indeed, without them, much of modern science and, increasingly, high-powered medicine would be impossible.

In the case of the Standard Model governing subatomic physics, it’s clear that the model has shortcomings and will need updating or perhaps even an entire rethink. But again, I must say, that’s how the best of science works.

Questions and hypotheses arise and must be tested, and if they don’t pass the test of experiment, then, as the famous American physicist Richard Feynman proclaimed, it’s time to go back to the drawing board.

That’s a tough standard, but it has stood up well over the centuries. However, there’s a hitch.

Experiments have to be well conceived, tested and carried out. Otherwise, science fails, as it has with new drugs for Alzheimer’s disease, where the standards of design and execution have too often been mediocre, even deliberately misleading.

Back to those muons. I have no idea how or when the muon controversy will be resolved, but the odd failure is a price worth paying for truly meaningful science.

Science, after all, has perspective on its side. What is proven to be true will remain true a century or a million years from now and beyond, even if, as with Newtonian physics and perhaps general relativity or the Standard Model of particle physics, it needs revision in light of new evidence.

Dr. William Brown is a professor of neurology at McMaster University and co-founder of the InfoHealth series at the Niagara-on-the-Lake Public Library.