My reference point for questions like this is the Star Trek series, “The Next Generation.” One of the officers, suitably named Data, on the Starship Enterprise stands out.

He’s cognitively very bright and has super-human strength, but struggles to understand the nuances of human feelings and emotions.

All went well until a Starfleet officer, keen to understand how Data worked with an eye to making copies of him, claimed the right to take him apart and study him without his permission, arguing that he wasn’t a sentient being and thus not entitled to refuse.

The issue was resolved by convening a court to explore whether Data was sentient and therefore entitled to refuse. After a long, gruelling, touch-and-go trial that explored all the issues and evidence, the court ruled that Data was sentient and as entitled as any human to refuse.

That fictional trial, which aired in 1989, raised questions that philosophers, neuroscientists, computer designers, AI companies, ethicists and religious experts have asked since the dawn of AI in the 1950s: Is AI capable of intelligence akin to that of humans and, at some stage, could AI be conscious?

On the question of whether AI is intelligent, I like the answer Barbara Montero gave in her Nov. 8 New York Times article, “AI Is on Its Way to Something Even More Remarkable Than Intelligence.”

She stated, “Today we have reached that point. AI is no less a form of intelligence than digital photography is a form of photography.”

Referring to consciousness, Montero states, “And now AI is on its way to doing something even more remarkable, becoming conscious. As we interact with increasingly sophisticated AI, we will develop a better and more inclusive conception of consciousness.” Makes sense to me.

AI is on a steep growth curve because of larger, better databases, improving algorithms, more powerful and faster computers, and learning from experience. That’s eerily similar to how the human brain learns.

First comes the basic layout of the nervous system and the circuitry necessary for basic functions such as rolling over, sitting, standing and walking, coupled with ever-richer interactions with caregivers and the immediate environment. Then comes the rapid learning of suites of new skills and understandings, in a process that goes on for a lifetime but is fastest in the early decades of life.

That’s where nature and AI part company: AI continues to improve by leaps and bounds, while the human capacity to learn begins to taper off in mid-life and declines faster in the later decades of life.

With the advent of practical quantum computers in the next few decades, the available computational power will be enormous and will pose a threat to any jobs that are computation- and data-driven.

By that stage, the power of quantum computers will exceed the capabilities of their human creators, and not necessarily for the good, especially if the underlying intelligence becomes autonomous.

After all, when devices become so talented that few if any humans, even working in groups, can keep up, never mind lead, where does that leave most humans, except in what some call joe jobs, the very jobs most humans have always wished technology would free them from?

Then there’s the matter of hybrid AI-human systems. Already, several types of devices have been developed to restore speech to those who have lost it, or movement where there is paralysis.

What teams of scientists have accomplished in the last five years is very impressive: using relatively simple systems with as few as 100 electrodes placed in regions of the brain associated with speech, they have restored spoken and written communication.

Imagine the power similar hybrid systems may offer for restoring natural movement to those with movement disorders or paralysis within the coming decade. Hybrid systems like these are bright spots for AI.

But back to the question: is AI intelligent? Sure. It is, and it is becoming as intelligent as most humans, or more so.

Is AI sentient? Not yet, but on the way. And it won’t be long.

Think back to what’s happened over the last 125 years and project forward at an ever-quickening pace to imagine how technology may affect our children, and certainly our grandchildren and beyond. The question is, where are the brakes if the pace becomes too fast, takes too many wrong turns, or begins to threaten how humans govern themselves?

Those questions are similar in urgency to the ones posed by that other looming threat, global climate change. Both need prompt action.

However, progress on climate change is stumbling and, on the technology front, little has been done proactively to prevent some of the scariest impacts of increasingly powerful AI.

The trouble with both threats is that meaningful action requires worldwide consensus, and for as long as modern humans have been around, consensus has never been one of our species’ strong points.

Intelligent, yes; wise, no.

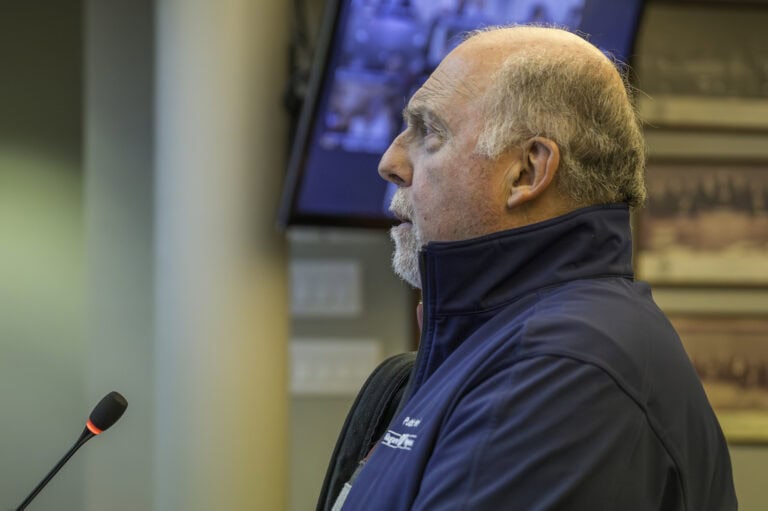

Dr. William Brown is a professor of neurology at McMaster University and co-founder of the InfoHealth series at the Niagara-on-the-Lake Public Library.