ChatGPT and similar artificial intelligence tools often capture public attention because the encounters feel so natural, especially when human users and AI chat with each other.

One example was recently featured in the New York Times: a video of a driver talking to his AI therapist through an iPhone perched on the car’s dashboard. Without the video, the conversation could well have been taking place in an office between a human therapist and a patient.

That’s what was so mesmerizing, even shocking, to me about the clip: its very naturalism. It was as if the driver were talking to a real human therapist who listened, empathized and offered advice when prompted, and who, in tone and content, seemed almost human.

The content and naturalness of the encounter were the product of a computer system drawing on a large database of information, in this case about psychotherapy, and a large language model capable of sounding, well, like you and me.

The versions of AI we experience in the form of ChatGPT and similar products developed by companies such as Google are far from perfect. They make mistakes and even make stuff up, producing so-called hallucinations.

Mistakes are usually related to the quality and source of the data. In health care especially, databases are based on “freely available” information from the internet, which is not checked for accuracy and can be misleading, even wrong.

For medicine, databases should be built on the best information available within high-quality health-care systems, such as those of Harvard or the Mayo Clinic, where there’s some measure of control over the quality of the data, while recognizing that these systems also make mistakes, which might be incorporated into the AI.

High-quality health-care systems can also afford to pay for content published in the best subscription-only journals and textbooks. But again, even the best sources make mistakes, and in some areas of medicine there may be no “right” answer.

In the public sphere, AI’s performance on qualifying examinations in medicine has reached the point where it regularly matches or exceeds that of humans, and in mathematics it challenges even the best college-level mathematicians. AI companies are quick to draw public attention to these results as evidence of their growing prowess in developing AI.

Some mathematicians are critical of AI’s successes in solving complex problems because it’s not always obvious how AI arrived at its solution.

The same might be said of Max Planck, Albert Einstein, Werner Heisenberg and Erwin Schrödinger, whose mathematics may have been excellent but who sometimes struggled to understand the implications of their own mathematical solutions.

A perfect example was Einstein’s stubborn refusal to accept that his masterpiece, general relativity, predicted an expanding universe rather than the unchanging universe he much preferred on philosophical grounds.

In 2025, Science and Nature, both high-profile, highly regarded scientific journals, ran several articles marking the 100th anniversary of quantum mechanics. Many of the equations that underpin quantum mechanics are highly precise and predictive, yet many physicists today continue to argue about the meaning of the equations they use every day in the practical work of quantum physics.

Some critics are quick to disparage AI because it makes mistakes and hallucinates, while ignoring the common human tendency to make stuff up and embellish our own stories and claims. But it’s important to remember that AI is still in its infancy.

Sure, AI has lots of shortcomings and makes mistakes, but with improving databases and computational methods it continues to evolve rapidly, and its potential is enormous.

Steven Weinberg, a particle physicist and Nobel laureate, claimed there were limits to human intelligence, at least for individuals. The collective intelligence of groups of humans working on problems is much greater, but it will probably fall well short of what AI could achieve in the decades to come, and it will certainly be no match for quantum computers once the bugs are worked out.

For now, AI is essential in engineering and science. How else could we analyze the masses of data collected by powerful telescopes such as the Webb telescope and, most recently, the Rubin Observatory?

AI and quantum computers may be creatures of human intelligence, but this time around humans have created a form of intelligence that already exceeds human intelligence at specific tasks and, worryingly, is on track to reach levels of general intelligence exceeding our own.

Constraining AI in the future is a hopeless task. AI will far exceed its human creators well before this half-century is out, and there is no option for accelerating human intelligence to keep pace, except perhaps risky gene editing or human-AI hybrids.

Some see AI as an existential threat to humans, now and in the near future. If so, that threat can’t be controlled by companies, or even individual countries, without international agreements with enforcement teeth.

Unfortunately, enforceable controls simply aren’t possible for tribalistic humans, as we’ve recently witnessed in our response to climate change, in attempts to resolve intractable conflicts in Ukraine and the Middle East, and in the looming threat of a forcible Chinese takeover of Taiwan.

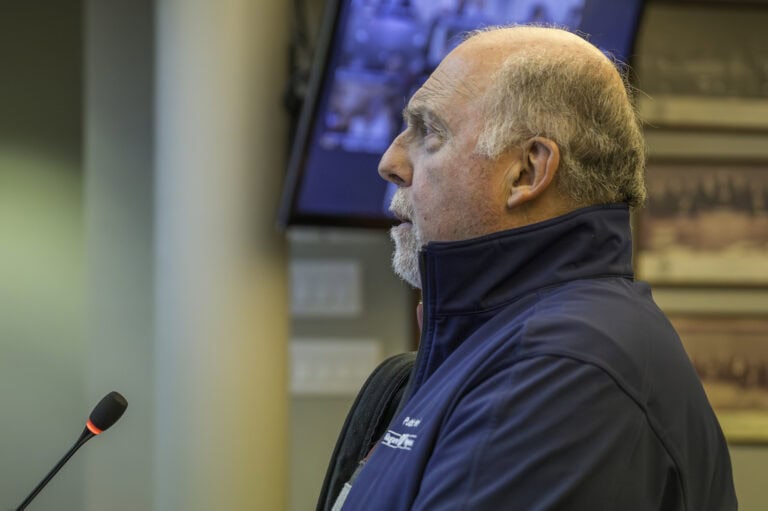

Dr. William Brown is a professor of neurology at McMaster University and co-founder of the InfoHealth series at the Niagara-on-the-Lake Public Library.